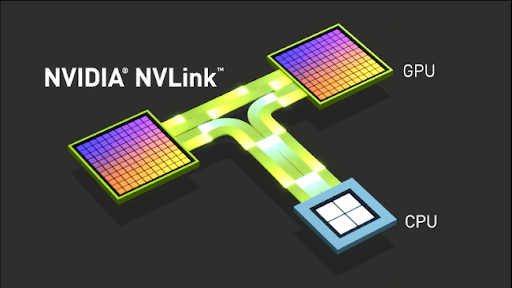

NVIDIA has released NVLink Fusion, a new technology that lets companies build custom AI chips by combining different components, such as GPUs and AI processors, on a single chip. This makes AI systems faster, more efficient, and easier to customize for different uses like cloud computing, self-driving cars, and smart devices. NVLink Fusion is set to change how AI hardware is made starting in 2025.

What NVLink Fusion Is

NVLink Fusion is NVIDIA’s new on-package interconnect; think of it as super glue for chiplets.

- Speed: 3 TB/s of bidirectional bandwidth, 50% more than NVLink 4.0.

- Openness: NVIDIA is publishing the full PHY and protocol so third-party tiles can “snap” in next to NVIDIA GPU tiles.

- Flexibility: GPUs, CPUs, memory blocks, or home-grown AI accelerators can all share one substrate, cutting board area and latency.
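To put the headline bandwidth figure in perspective, here is a rough sketch of the best-case time to move a block of data across a 3 TB/s link. The 96 GB payload and the function name are hypothetical, chosen only for illustration. The calculation also assumes the full quoted rate is available to a single transfer, which real links will not achieve once protocol overhead and contention are counted.

```python
FUSION_TB_PER_S = 3.0  # headline bandwidth quoted in the list above


def ideal_transfer_ms(payload_gb: float, rate_tb_per_s: float) -> float:
    """Best-case milliseconds to move payload_gb gigabytes (1 TB = 1000 GB)."""
    if payload_gb < 0 or rate_tb_per_s <= 0:
        raise ValueError("payload must be >= 0 and rate must be > 0")
    seconds = payload_gb / (rate_tb_per_s * 1000.0)
    return seconds * 1000.0


# Hypothetical payload: 96 GB of model weights (an illustrative size only).
print(f"{ideal_transfer_ms(96.0, FUSION_TB_PER_S):.1f} ms")
```

Treat the result as a lower bound on transfer time, not a prediction of measured performance.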

Why Custom AI Silicon Now?

Different industries need different AI muscles.

- Cloud: Huge language-model throughput.

- Edge & IoT: Tiny, low-power inference.

- Automotive: Real-time vision plus safety logic.

Without Fusion, companies either overbuy generic GPUs or build a fully custom SoC. NVLink Fusion offers a middle path: build just the tile you need, place it next to an NVIDIA GPU, and save about 40% power while trimming training time by ~30% for low-data workloads.
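As a back-of-the-envelope check on what those two figures mean together: energy is power multiplied by time, so a power cut and a time cut compound. The sketch below assumes both savings apply to the same training job at once and that average power is a fair proxy for total draw. Those assumptions, and the function name, are illustrative rather than anything NVIDIA has stated.

```python
def relative_energy(power_saving: float, time_saving: float) -> float:
    """Energy relative to baseline, assuming energy = average power * run time."""
    for value in (power_saving, time_saving):
        if not 0.0 <= value < 1.0:
            raise ValueError("savings must be fractions in [0, 1)")
    return (1.0 - power_saving) * (1.0 - time_saving)


# Figures quoted above: about 40% less power, about 30% less training time.
ratio = relative_energy(0.40, 0.30)
print(f"Energy per training run: {ratio:.0%} of baseline")
```

Under those assumptions the combined effect on energy per run is larger than either figure alone suggests.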

Software Still Feels Familiar

Hardware changes are useless if the software breaks.

- CUDA 13 will ship with a Fusion layer that hides the tile map.

- PyTorch, TensorFlow, and JAX run unchanged, while existing code can still call the custom accelerator.

- Initial tests show GPT-4-class models scaling across 256 fused tiles per node with zero code rewrites.

In silicon’s dance, the future calls, Tiles unite behind high walls. Speed and power blend as one, Custom AI has just begun.

Early Adopters & Industry Buzz

- Amazon Web Services: Testing Fusion in next-gen Trainium cloud instances.

- Mercedes-Benz: Fusing an in-house driving core with NVIDIA GPUs for 2027 cars.

- Open Compute Project: Added Fusion to its 2025 server roadmap as “key to open chiplet ecosystems”.

Gartner estimates that one in three AI servers shipped by 2028 will host at least one non-NVIDIA tile on NVLink Fusion.

Availability & Licensing

| Milestone | Date | Details |
|---|---|---|
| Early access kit | Q4 2025 | Reference flows for TSMC 3 nm & Samsung SF2; Fusion Switch for rack links |
| General licensing | H1 2026 | Foundry-agnostic; compliance program for “Fusion-Ready” badge |
| Cost model | Royalty per tile | Similar to Arm: low entry for startups and giants alike |

Impact on the AI Ecosystem

NVLink Fusion should change how AI hardware is designed and sold.

It encourages a chiplet ecosystem in which companies create specialized components that fit into a shared standard.

This lets smaller players innovate without building whole chips from scratch, while large companies can quickly customize hardware for specific workloads.

Overall, this modular approach may also accelerate AI development and lower costs industry-wide.

Challenges Ahead

Though promising, NVLink Fusion faces a few hurdles.

- Manufacturing these complex multi-tile chips calls for new packaging technology and supply chains.

- Ensuring full software compatibility and debugging across many partners’ tiles will be difficult.

- Adoption depends on convincing large cloud and device makers to shift from monolithic chips to modular designs.

However, NVIDIA’s strong ecosystem and early partner support suggest these challenges can be overcome in the coming years.

The Bigger Picture

AI workloads keep exploding, from trillion-parameter LLMs to edge vision in drones, while power budgets remain tight. NVLink Fusion turns NVIDIA from a pure GPU vendor into the backbone of a modular AI hardware era. Servers, cars, and factory rigs shipping in 2026 will likely look more like LEGO mosaics of specialized blocks, all humming together via Fusion.

NVIDIA’s bet is clear: open the door to custom AI silicon, keep the beloved CUDA software stack intact, and let innovation snap into place, one tile at a time.